Bayes Theorem Demystified

Dr. Demystifier (Dr. D) vs. a High School Student Ken

1 Do we need the Bayes theorem?

Can we do everything with the conditionals and marginals?

This is a fictional story about a high school student, Ken, and his math teacher, Dr. Demystifier (Dr. D). Ken has just learned the Bayes theorem but is utterly mystified by the formula and unaware of its use.

They will discuss the following topics:

- Bayes Theorem Derivation

- Belief Update Once

- Belief Update Twice

- Belief Update Forever

2 Bayes Theorem Derivation

Ken asked Dr. D, “May I ask a question about the Bayes theorem?”

Dr. D nodded and said, “Please do”.

Ken continued, “The Bayes theorem says:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

I know how to derive it using the conditional probabilities, which we define using the joint probabilities:

$$P(A \mid B) = \frac{P(A, B)}{P(B)}, \qquad P(B \mid A) = \frac{P(A, B)}{P(A)}$$

We can re-arrange them to derive the following equality:

$$P(A \mid B)\,P(B) = P(A, B) = P(B \mid A)\,P(A)$$

Re-arranging them further yields the Bayes theorem:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

It seems pretty straightforward, yet I am not very sure how it is useful”.

Dr. D kept his mouth shut, staring at Ken’s face through his pair of sunglasses. He seemed to be deep in thought, and Ken knew that Dr. D would come up with a question to help him realize how to make the best use of the Bayes theorem. However, the wait felt like it would last forever, like the heavy rain before a baseball match in his childhood memory.

3 Belief Update Once

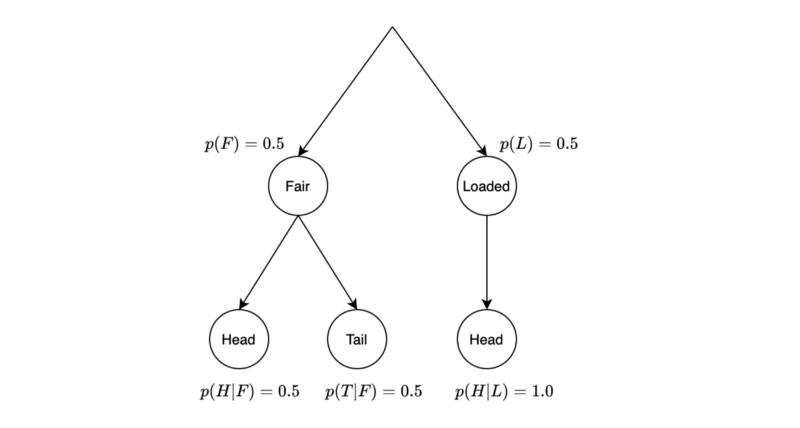

Dr. D opened his mouth, “I have two coins. One coin is fair, and the other is loaded. The fair coin flips to the head with a 50% chance. The loaded coin flips to the head 100% of the time. Suppose you randomly pick one of them, toss it, and the tail shows up. Can you tell me whether it’s the fair coin?”

Ken immediately answered, “Yes, it’s the fair coin because the loaded one cannot show the tail”.

Dr. D asked another question, “If it was the head, can you tell me if it’s a fair coin?”

Ken answered, “No, I cannot because both coins can flip to the head. But I can say it is more likely to be the loaded one”.

Ken drew a diagram explaining his reasoning.

The probability that we have the loaded coin (L) given the head (H) is the following conditional probability:

$$P(L \mid H) = \frac{P(H, L)}{P(H)}$$

So, we need to calculate the marginal probability of the head.

Note: the word marginal means we eliminate a variable from the probability function by summing over that variable. In this example, we eliminate the variable that indicates whether the coin is fair or loaded.

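The joint probability of the head (H) and the fair coin (F) is 0.25:

$$P(H, F) = P(H \mid F)\,P(F) = 0.5 \times 0.5 = 0.25$$

The joint probability of the head (H) and the loaded coin (L) is 0.5:

$$P(H, L) = P(H \mid L)\,P(L) = 1 \times 0.5 = 0.5$$

So, the marginal probability of the head is 0.75:

$$P(H) = P(H, F) + P(H, L) = 0.25 + 0.5 = 0.75$$

The conditional probability of the loaded coin given the head is ⅔:

$$P(L \mid H) = \frac{P(H, L)}{P(H)} = \frac{0.5}{0.75} = \frac{2}{3}$$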

Ken explained, “Prior to the first flip of the coin, the probability of having the loaded coin was ½. After observing the head from the first flip, our belief that we have the loaded coin has increased from ½ to ⅔”.
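Ken’s steps can also be checked numerically. The following is a minimal sketch, not part of the original story: the dictionary names (`prior`, `p_head`, `joint`, `posterior`) are illustrative assumptions, and exact fractions are used so the results match the values above.

```python
from fractions import Fraction

# Prior: each coin is equally likely to be picked.
prior = {"fair": Fraction(1, 2), "loaded": Fraction(1, 2)}
# Probability of the head for each coin, as stated by Dr. D.
p_head = {"fair": Fraction(1, 2), "loaded": Fraction(1)}

# Joint probability P(H, coin) = P(H | coin) * P(coin).
joint = {coin: p_head[coin] * prior[coin] for coin in prior}
# Marginal P(H): sum the joint over the coin variable.
marginal_head = sum(joint.values())
# Conditional P(coin | H) = P(H, coin) / P(H).
posterior = {coin: joint[coin] / marginal_head for coin in joint}

print(joint["fair"], joint["loaded"])  # 1/4 1/2
print(marginal_head)                   # 3/4
print(posterior["loaded"])             # 2/3
```

Only joint, marginal, and conditional probabilities appear here, which is exactly the route Ken took.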

Dr. D nodded at each equation Ken drew and said, “Excellent! You’ve already used the Bayes theorem while deriving the conditional probability of the loaded coin given the head”. Dr. D added another equation:

$$P(L \mid H) = \frac{P(H \mid L)\,P(L)}{P(H)} = \frac{1 \times 0.5}{0.75} = \frac{2}{3}$$

Ken looked at the equation and then said, “I didn’t directly use the Bayes theorem, but I was doing the same calculation. So, it seems to me that we don’t need the Bayes theorem. We only need to know the joint probability, conditional probability, and marginal probability”.

Dr. D agreed with Ken, “Yes, you’re right. You didn’t need the Bayes theorem. So far, so good”.

Ken assumed that Dr. D was just making sure of Ken’s understanding of the basic concepts related to the Bayes theorem.

4 Belief Update Twice

Dr. D said, “Suppose we flip the same coin again and observe the tail, what would be the probability that we’ve picked up the fair coin?”

Ken answered, “That’d be 100% (fair coin) since the loaded one cannot flip to the tail”.

“Great!” Dr. D continued, “If it was the head, what would be the probability that we had picked up the loaded one?”

Ken said, “Sure, I can handle this one, too”. He started to explain his steps:

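We need to calculate the conditional probability of the loaded coin after observing the head twice in a row (HH):

$$P(L \mid HH) = \frac{P(HH, L)}{P(HH)}$$

The marginal probability of observing the head twice is:

$$P(HH) = P(HH \mid F)\,P(F) + P(HH \mid L)\,P(L) = 0.25 \times 0.5 + 1 \times 0.5 = 0.625$$

Note: the following is true because, for a given coin, every flip is an independent event.

$$P(HH \mid F) = P(H \mid F)\,P(H \mid F) = 0.25, \qquad P(HH \mid L) = P(H \mid L)\,P(H \mid L) = 1$$

The conditional probability of the loaded coin given the head twice in a row is:

$$P(L \mid HH) = \frac{P(HH \mid L)\,P(L)}{P(HH)} = \frac{1 \times 0.5}{0.625} = 0.8$$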

Ken concluded, “The probability that we have picked up the loaded coin is now 0.8 after having seen the head twice in a row”.
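Ken’s from-scratch approach generalizes to any number of heads in a row. The sketch below is an illustration under the story’s assumptions (two coins, equal priors, flips independent given the coin); the function name `posterior_loaded_after_heads` is hypothetical.

```python
from fractions import Fraction

def posterior_loaded_after_heads(n):
    """P(loaded | n heads in a row), recomputed from the original priors."""
    prior_fair = Fraction(1, 2)
    prior_loaded = Fraction(1, 2)
    # Flips are independent given the coin, so
    # P(n heads | coin) = P(H | coin) ** n.
    joint_fair = Fraction(1, 2) ** n * prior_fair
    joint_loaded = Fraction(1) ** n * prior_loaded
    # Marginal probability of n heads: sum over both coins.
    marginal = joint_fair + joint_loaded
    return joint_loaded / marginal

print(posterior_loaded_after_heads(1))  # 2/3
print(posterior_loaded_after_heads(2))  # 4/5
```

Each call starts again from the original ½ priors and the full sequence of flips, which is what makes this approach feel repetitive as the number of flips grows.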

5 Belief Update Forever

Dr. D said, “Excellent again! Let’s flip the coin for the third time”.

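Ken started doubting Dr. D. “Why is he asking the same thing again? What is the purpose of these repeated questions? If we kept going like this, I would need to calculate

$$P(L \mid HHH) = \frac{P(HHH, L)}{P(HHH)}$$

for three heads in a row (HHH), and then start over for every additional flip”.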

Prior to the first flip, the probability of the loaded coin was ½. After observing the head from the first flip, the conditional probability of the loaded coin became ⅔.

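Then, Dr. D drew another equation:

$$P(L \mid H) = \frac{P(H \mid L)\,P(L)}{P(H)} = \frac{1 \times \frac{1}{2}}{\frac{3}{4}} = \frac{2}{3}$$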

So, we could’ve calculated the same thing with the Bayes theorem.

We call the conditional probability that we picked the loaded coin given the first flip the posterior probability (or just the posterior). It is ⅔ given the first flip (the head), so we believe that the probability of having the loaded coin is ⅔.

When we flip the coin for the second time, our prior belief is the posterior belief from the first flip. Namely,

$$P(L) = \frac{2}{3}, \qquad P(F) = \frac{1}{3}$$

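Then, we can also update the marginal probability of the head using the updated priors:

$$P(H) = P(H \mid F)\,P(F) + P(H \mid L)\,P(L) = \frac{1}{2} \times \frac{1}{3} + 1 \times \frac{2}{3} = \frac{5}{6}$$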

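After observing the second flip (the head), we again update our belief in the loaded coin using the Bayes theorem:

$$P(L \mid H) = \frac{P(H \mid L)\,P(L)}{P(H)} = \frac{1 \times \frac{2}{3}}{\frac{5}{6}} = \frac{4}{5}$$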

Repeating the same process, the posterior beliefs after the second flip become the prior beliefs for the third flip:

$$P(L) = \frac{4}{5}, \qquad P(F) = \frac{1}{5}$$

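We also update the marginal probability of the head:

$$P(H) = P(H \mid F)\,P(F) + P(H \mid L)\,P(L) = \frac{1}{2} \times \frac{1}{5} + 1 \times \frac{4}{5} = \frac{9}{10}$$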

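After the third flip (the head), the posterior belief in the loaded coin is:

$$P(L \mid H) = \frac{P(H \mid L)\,P(L)}{P(H)} = \frac{1 \times \frac{4}{5}}{\frac{9}{10}} = \frac{8}{9}$$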

If we keep getting the head, our belief in the loaded coin keeps rising toward 1, meaning that we believe more and more that the coin is loaded. But if we get the tail even once, it drops to zero because the loaded coin has zero chance of the tail.

Instead, our belief in the fair coin would be 100% due to the tail showing up.

We just need more observations to keep updating our beliefs.
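The update loop described above can be sketched in a few lines. This is an illustrative example rather than anything from the original story: the names `LIKELIHOOD`, `update`, and `belief`, and the flip sequence `"HHHT"`, are assumptions chosen to mirror the three heads followed by one tail discussed here.

```python
from fractions import Fraction

# Probability of each outcome for each coin, as stated in the story.
LIKELIHOOD = {
    "H": {"fair": Fraction(1, 2), "loaded": Fraction(1)},
    "T": {"fair": Fraction(1, 2), "loaded": Fraction(0)},
}

def update(belief, outcome):
    """Apply the Bayes theorem once: prior belief -> posterior belief."""
    # Numerator of the Bayes theorem for each coin: P(outcome | coin) * P(coin).
    unnormalized = {c: LIKELIHOOD[outcome][c] * belief[c] for c in belief}
    # Denominator: marginal probability of the outcome under the current belief.
    marginal = sum(unnormalized.values())
    return {c: p / marginal for c, p in unnormalized.items()}

# Start from the original priors, then reuse each posterior as the next prior.
belief = {"fair": Fraction(1, 2), "loaded": Fraction(1, 2)}
history = []
for outcome in "HHHT":
    belief = update(belief, outcome)
    history.append(belief["loaded"])

print([str(p) for p in history])  # ['2/3', '4/5', '8/9', '0']
print(belief["fair"])             # 1
```

Each step only needs the current belief and the newest flip, so the same `update` call works no matter how many flips came before.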

Ken told Dr. D, “I can do this forever, thanks to the Bayes theorem!”

Dr. D smiled.
