Beam Search for Machine Translation

How Greedy, Exhaustive, and Beam Search Algorithms Work

Beam search is a heuristic technique for choosing the most likely output used by probabilistic sequence models like neural machine translation.

Machine translation is fundamentally multi-label learning where we have multiple plausible answers, yet the model must pick the best possible translation.

We often use greedy search while explaining machine translation models, focusing on core concepts such as the RNN encoder-decoder structure and attention mechanisms. So, we do not talk much about actual output generation.

However, it is a common assumption that we should already know what beam search is. As such, people new to the field might get stuck with the so-called minor detail before reaching the main subject in a paper.

This article discusses how beam search works with the following topics:

- Greedy Search

- Exhaustive Search

- Beam Search

1 Greedy Search

In machine translation, the decoder produces a list of output probabilities.

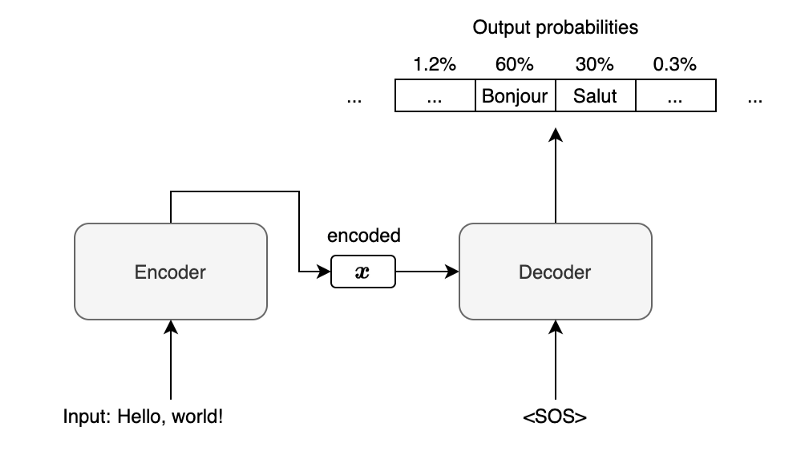

For example, in an English-to-French machine translation, the encoder processes an input English sentence to produce encoded information (vector

Note: <SOS> is a special character indicating the start of a sentence. The decoder receives it as the first input along with the vector

For simplicity, we are not showing any attention mechanism or other details like word embedding. Such details won’t change anything about how beam search works. Our discussion focuses on the method of choosing an output given the list of output probabilities.

In the above diagram, given the input English sentence “Hello, world!”, the output probabilities for the first French word are 60% for “Bonjour”, 30% for “Salut”, and so on. If the output vocabulary includes 100,000 items (words and special characters), there will be 100,000 output probabilities.

In greedy search, we choose “Bonjour” as the model predicts it with the highest probability and use it as the second input to the decoder.

The decoder processes “Bonjour” as the second input and predicts “le” as the most probable second output, which we use as the third input to the decoder. The process continues until the decoder returns <EOS> (the end-of-sentence marker) as the most probable output.

The greedy search might work well if there was always one output with a very high probability. We can not always guarantee that because the more complex sentences would have multiple feasible translation outputs.

So, in general, the greedy search is not the best way and may not work well except for simple translations. Using the greedy search can lead us to the wrong path.

2 Exhaustive Search

With an exhaustive search, we consider searching for all possible outputs.

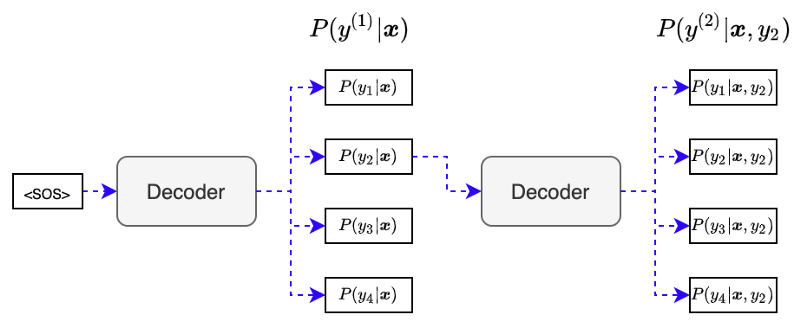

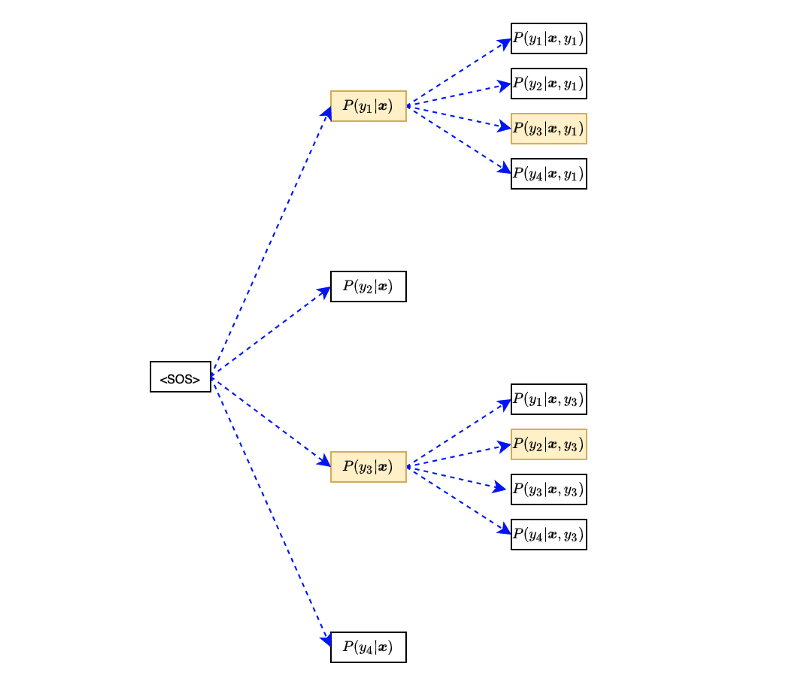

Suppose we have only four items in the output vocabulary. We give <SOS> to the decoder to produce output probabilities:

The first step output probabilities depend on the encoded vector

Each output would have a different probability, but we use each for the next input to the decoder. For example, we give output

The second step output probabilities depend on the encoded vector

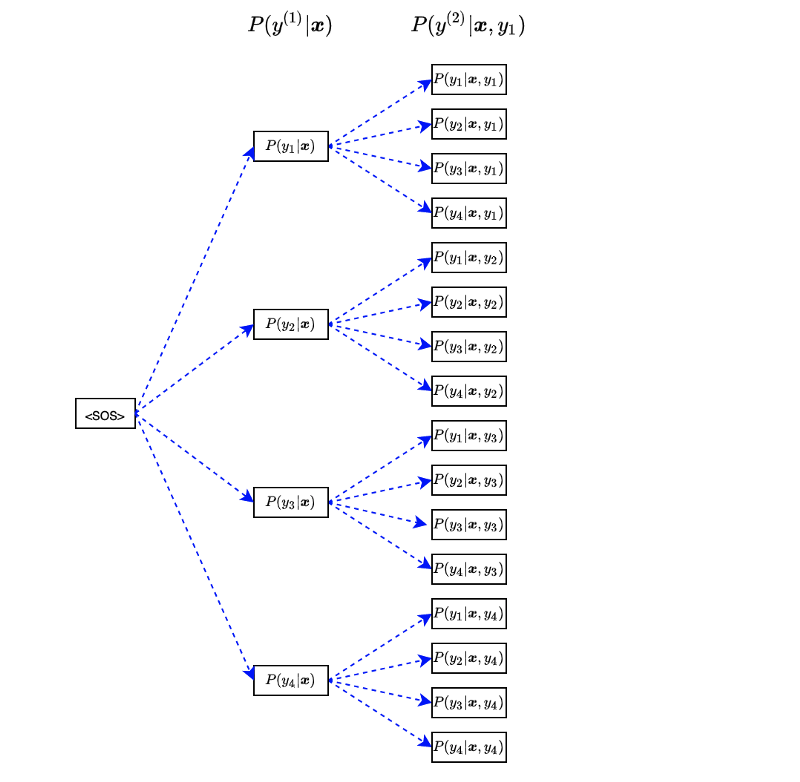

We combine the first-step and second-step probabilities to have the following joint probabilities:

Although it has only two words, we can evaluate which output sequence has the highest probability using the above joint probabilities.

Note: for simplicity, I’m ignoring that one of the possible outputs is <EOS>, so we don’t need to talk about the termination condition. However, we should stop following a path when <EOS> happens.

We repeat the same procedure using output

We combine the first-step and second-step probabilities to have the following joint probabilities:

After processing all of the first outputs, we have 16 joint probabilities.

We can summarize the 16 joint probabilities in the following equation:

Next, we use each of 16 sequences to calculate the joint probabilities for 64 (=16x4) output sentences (containing three words). We can write the joint probabilities in the following equation:

We continue this procedure for four-word, five-word, and so on. Our job is to find a vector

However, the exhaustive search will cause the curse of dimensionality when dealing with many items in vocabulary and long output sentences. If we have 100,000 items in vocabulary and we search for output sentences with ten words, the number of permutations becomes:

So, the exhaustive search becomes very exhausting (pun intended). But seriously, it consumes a lot of memory and computation time. We need something less complete than an exhaustive search, which works better than the greedy search.

3 Beam Search

The beam search strikes a balance between the greedy search and the exhaustive search at the expense of introducing one hyper-parameter

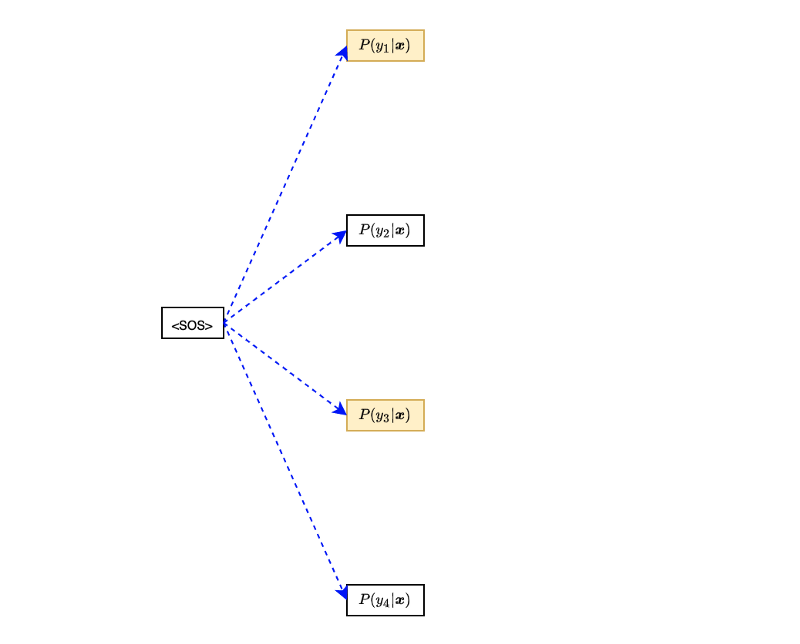

For instance, with β = 2, we only keep the first and the second probable sequence at each step. Let’s go through an example where we have only four items in the output vocabulary:

Suppose outputs

We use only the top two sequences:

When