Calculus of Variations Demystified

How To Solve The Shortest Path Problem

1 How to Derive the Euler-Lagrange Equation

We use the calculus of variations to optimize functionals.

You read it right: functionals, not functions.

But what are functionals? What does a functional look like?

Moreover, there is a thing called the Euler-Lagrange equation.

What is it? How is it useful?

How do we derive such an equation?

You are in the right place if you have any of the above questions.

I’ll demystify it for you.

2 The Shortest Path Problem

Suppose we want to find the shortest path from point

If there is no obstacle, the answer should be a straight line from

But for now, let’s forget that we know the answer.

Instead, let’s frame the problem into an optimization problem and mathematically show that the straight line is the answer.

Doing so lets us understand what functionals and variations are and how to derive the Euler-Lagrange equation to solve the optimization problem.

Before finding the shortest path, we need to be able to calculate the length of a path from

As the famous Chinese proverb says, “A journey of a thousand miles begins with a single step”.

So, let’s calculate the length of a small passage and integrate it from

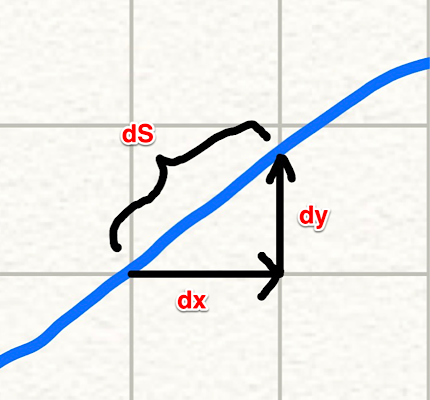

As shown in the above diagram, a small passage can be approximated by a straight line.

So, the total length of a path can be calculated by the following integration:

Since

In the last step,

So, we are integrating the square root from

Now we know how to calculate the length of a path from

We will find the

3 What is a functional?

We know that the

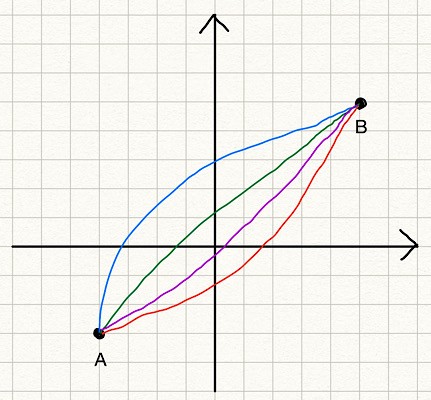

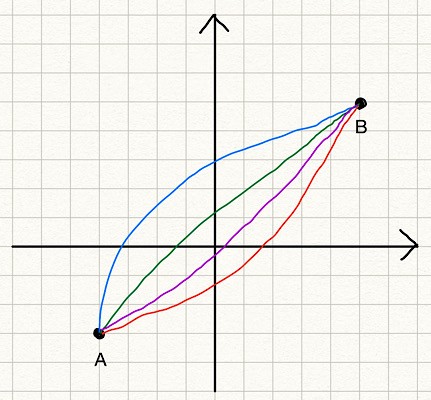

Instead, we consider many potential solution lines from

The blue line is

But having a function name for each line is too tedious, so we call them collectively

In other words,

If we talk about functions of functions like this general, there could be too many kinds of functionals, many of which may not be that useful. Since functional optimization originates from Physics, which deals with positions and velocities, the following general form is very common, and they have many applications (not only in Physics but also in machine learning).

The above may look too abstract. Let’s apply it to our shortest path problem. For the shortest path problem,

We integrate

In the shortest path problem,

That is ok.

The point is that if we can solve the optimization problem for the general form of functionals, we can solve many problems, including the shortest path problem.

4 Functional variations

Before attempting to solve the general form of functionals, let’s think about how to solve the shortest path problem. How about we generate many random functions and evaluate the path length to find the shortest path?

We can map each path to a path length value using the functional

We can take an alternative approach since we know the shortest path exists. We start with the shortest path and slightly modify it to see what kind of condition is required for the shortest path.

Let’s call the shortest path function

If we add an arbitrary function

The term

Let’s apply a variation to the shortest path to make it more concrete. In the below diagram,

- The blue straight line is the shortest path

- The purple line is an example of a variation

Also, note that

Why do we think about the variation?

The reason is that the variation makes the functional optimization into a function optimization, which we know how to solve.

If we look at

Therefore, the only variable in

So, the derivative of

Here, the apostrophe (

In case it’s not clear, an apostrophe is used when we calculate the derivative of a function by the only independent variable. Since

Let’s go back to the general form to reiterate all the points we’ve discussed so far and derive the Euler-Lagrange equation to solve the optimization problem.

5 The Euler-Lagrange equation

The general form of functionals we are dealing with is as follows:

- We say the function

- We define

- Any

Since

We’ve successfully converted the general form of functionals into a function of

So far, we reinforced the same idea from the shortest path problem for the general form of functionals. Now, we are going to solve it for the general form of functionals to derive the Euler-Lagrange equation.

By substituting

Let’s calculate the total derivative of

The first term is zero since

So, the total derivative of

When

Substituting this into the equation

In the second term, we have

The first term is zero due to the boundary conditions:

Therefore,

As

This is called the Euler-Lagrange equation, which is the condition that must be satisfied for the optimal function

6 Solving the shortest path problem

Let’s solve the shortest path problem using the Euler-Lagrange equation.

As mentioned before,

Now, we say

So, we think of

This

Let’s solve the Euler-Lagrange equation for the shortest path problem.

Since

As such, the second term is also zero.

We integrate the above by

Let

Voilà!

If we put the boundary conditions

7 Last but not least

The essential idea of the calculus of variations is to make a functional into a function of

We derived the Euler-Lagrange equation for the general form of functionals, which must be satisfied for solution functions. Mathematically speaking, the Euler-Lagrange equation is not a sufficient condition for the functional minimum or maximum. It just tells us the solution function makes the functional stationary.

However, in many problems, we know the solution would give the functional minimum or maximum. In the shortest path problem, the maximum is unlimited, so we know the solution to the Euler-Lagrange equation gives the minimum function.

In case we want to check if the solution function is for the maximum or minimum, we need to calculate the second derivative of

Let’s do this for the shortest path problem:

The first derivative of

The second derivative of

This is a positive value, so we know the Euler-Lagrange equation for the shortest path problem gives the minimum function. In theory, we should check the value of the second derivative when

When

The solution function (the straight line) given by the Euler-Lagrange equation for the shortest path problem gives the minimum of the functional

Lastly, I assumed all the derivatives are possible in this article. For example,

I hope you have a clearer idea about the calculus of variations now.