GPT-2: Too Dangerous To Release (2019)

The Difference between GPT-1 and GPT-2

GPT-2 is a direct scale-up of GPT-1, with more parameters and trained on more data. However, it was deemed too dangerous to release by OpenAI:

Due to our concerns about malicious applications of the technology, we are not releasing the trained model. As an experiment in responsible disclosure, we are instead releasing a much smaller model for researchers to experiment with, as well as a technical paper. OpenAI Blog – Better Language Models and Their Implications

GPT-1 was released to the public without such serious concerns. Therefore, the above claim made the public wonder how powerful GPT-2 must be in generating texts that look like humans wrote.

Moreover, what’s the difference between GPT-1 and GPT-2?

1 The Difference: GPT-1 vs. GPT-2

In the GPT-1 paper, they experimented with the model on zero-shot task transfer in that they used the pre-trained model with heuristic solutions to perform specific tasks. The experiment’s success suggests that without supervised fine-tuning, the language model already contains information required to perform specific tasks. All that knowledge is stored in network parameters (weights and biases).

In other words, more parameters should increase the capacity of the language model and make it more robust to those specific tasks. In this sense, fine-tuning simply adds the final touch to the model for a specific task, and therefore the main thing that makes GPT-1 great is the pre-training.

So, pre-training such a model with more parameters should improve the model’s performance further. Hence, GPT-2 is a direct scale-up of GPT-1, with more parameters and trained on more data. As such, GPT-1 and GPT-2 are not different in terms of architecture. Both are based on the transformer’s decoder.

However, their main difference is the number of parameters and the amount and variety of training texts that allows the neural network to acquire more language knowledge and understanding and absorb them into its parameters.

The larger model of GPT-2 (that was not released in February 2019) has 1.5 billion parameters, 10 times more than GPT-1. They trained the model on 40GB of web texts and achieved state-of-the-art results on various language modeling, reading comprehension, question answering, and summarization benchmarks.

2 GPT-2: 1.5B Release

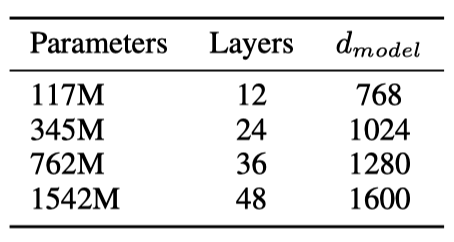

The GPT-2 paper explains that there are four configurations of GPT-2.

The biggest GPT-2 uses 1.5B parameters for 48 decoder blocks with d_model = 1600. Considering the original transformer used six decoder blocks with the embedding dimension (d_model) of 512, the big GPT-2 model is humongous. Successfully training such a huge model itself is a big achievement.

Nine months after the initial announcement of GPT-2, OpenAI decided to release the big GPT-2 with 1.5B parameters along with code and model weights:

We hope that this test case will be useful to developers of future powerful models, and we’re actively continuing the conversation with the AI community on responsible publication.

…

Our experience with GPT-2 over the past nine months has given us valuable insight into the challenges and opportunities for creating responsible publication norms in AI. Open AI Blog – GPT-2: 1.5B Release – November 5, 2019

They summarized their findings from the nine months:

- Humans find GPT-2 outputs convincing.

- GPT-2 can be fine-tuned for misuse.

- Detection is challenging (detection rates of ~95% for detecting 1.5B GPT-2-generated text by RoBERTa).

- We’ve seen no strong evidence of misuse so far.

- We need standards for studying bias.

All these points are valid, and OpenAI did a great job identifying potential risks, especially misuse and biases, at an early stage.

3 GPT-2 vs. ChatGPT

Today (December 2022), we’ve already seen how well ChatGPT performs. So, GPT-2 does not seem so harmful. I can see that they applied what they learned into ChatGPT to prevent misuses, for example, by not impersonating people.

However, many other misuses, like students making ChatGPT do their home, are harder to prevent. These problems will likely persist and become widespread as researchers improve their AI capability. Could teachers use a detection model to find out if students have cheated? It’s getting harder.