Gradient Descent Optimizers

Understanding SGD, Momentum, Nesterov Momentum, AdaGrad, RMSprop, AdaDelta, and ADAM – Made Easy

1 Gradient Descent Optimizers

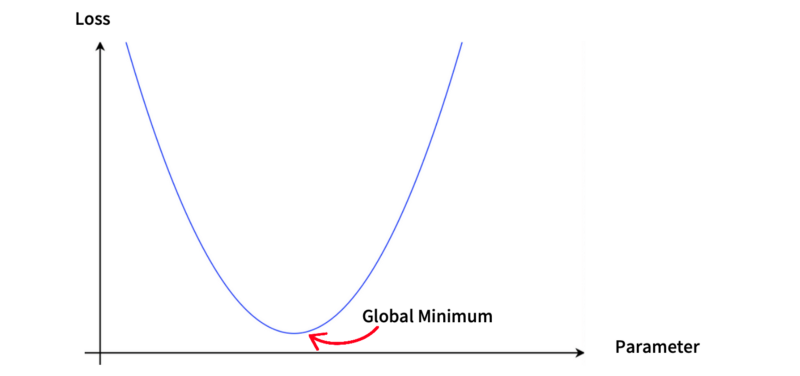

Why do we need Gradient Descent Optimizers? In machine learning, we aim to minimize the difference between predicted and label values. In other words, we want to minimize loss functions. If a loss function is a simple parabola with one parameter, it has one minimum, and we can even solve it with a pen and paper.

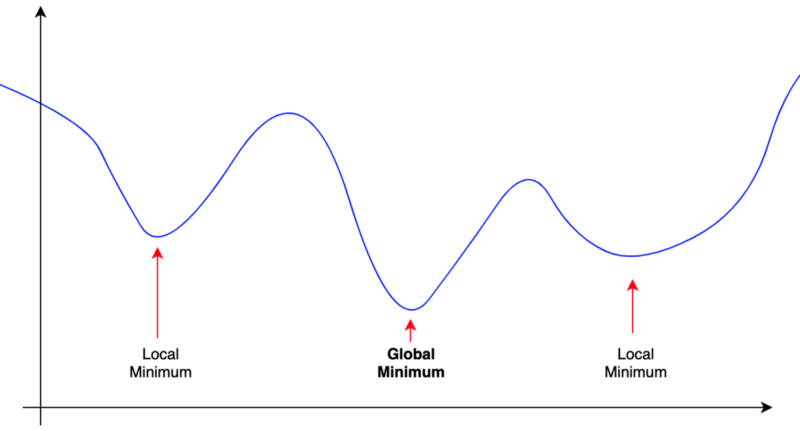

But an actual loss function typically has multiple minima, hopefully with only one global minimum.

We don’t want to get stuck with local minima. We want to climb mountains and continue to explore until we find the global minimum.

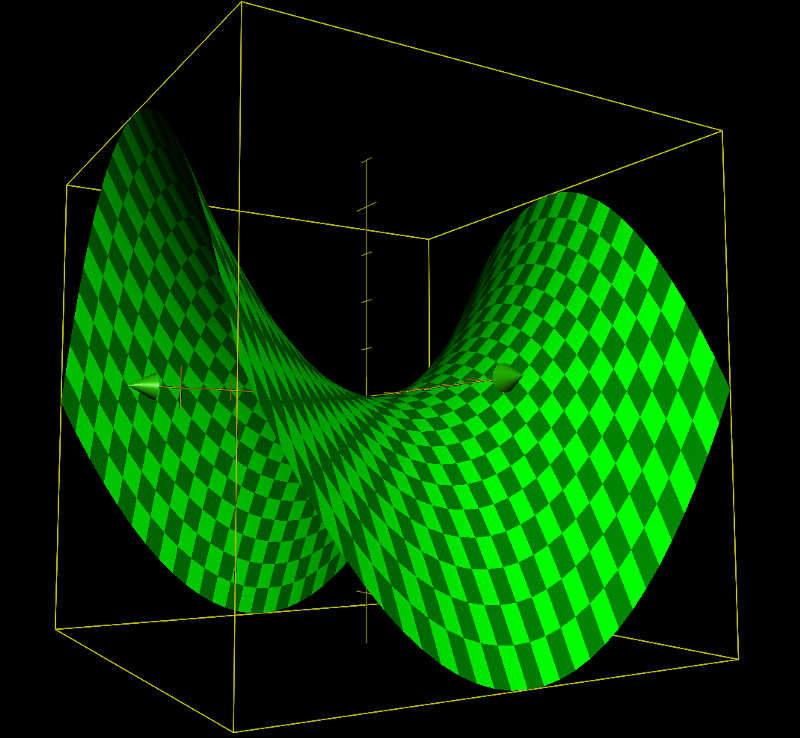

In deep learning, we treat a deep neural network as a function with many parameters like weights and biases. As there are multiple dimensions, there could be saddle points that are both minimum and maximum, like the one below.

In a high-dimensional world, a saddle point may be minimum for some parameters and maximum for others. If the slopes around the saddle point are very shallow, it may not be easy to get out of the saddle point.

Analytically solving the minimization problem with a complex loss function with multiple local minima and saddle points is impractical.

As such, we use a numerical solution like the stochastic gradient descent algorithm by iteratively adjusting parameters to reduce the loss value.

Researchers invented optimizers to avoid getting stuck with local minima and saddle points and find the global minimum as efficiently as possible.

In this article, we discuss the following:

- SGD

- Momentum

- Nesterov Momentum

- AdaGrad

- RMSprop

- AdaDelta

- ADAM

2 Loss Function and the Gradient

Let’s define some terminology first.

A neural network

For example,

A loss function

The smaller the loss value is, the better the quality of the prediction.

In deep learning, we deal with a massive amount of data and often use a batch of input data to adjust network parameters, and a loss value would be an average of loss values from a batch.

The value

In this article, when discussing a loss function, we mean

A gradient is a vector of partial derivatives like the one below:

Each component of a gradient

2.1 SGD

The name SGD came from the stochastic gradient descent algorithm itself, and as such, it is the most straightforward optimizer in this article.

We update each parameter to the opposite direction of the partial derivative.

λ is a learning rate that controls how much to update. It is a hyper-parameter that we must decide for training.

So, we do this for all

We can write the above in one line like below:

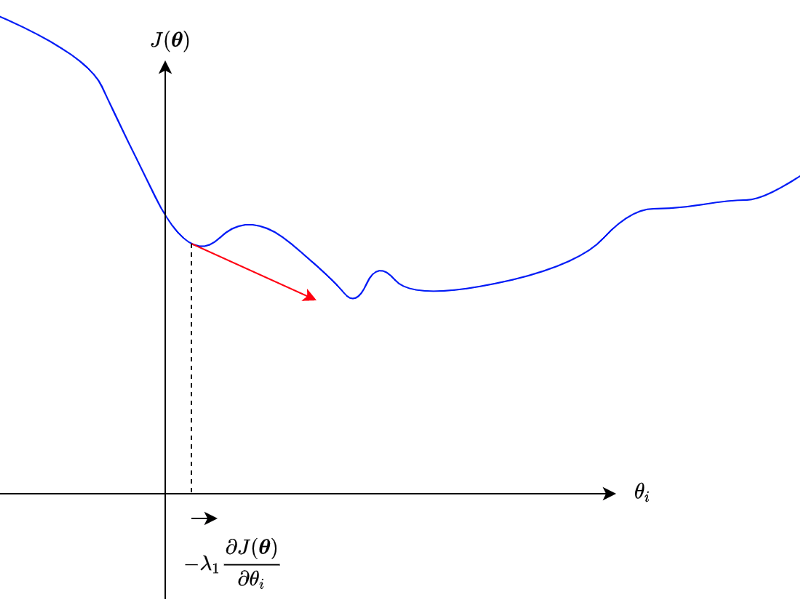

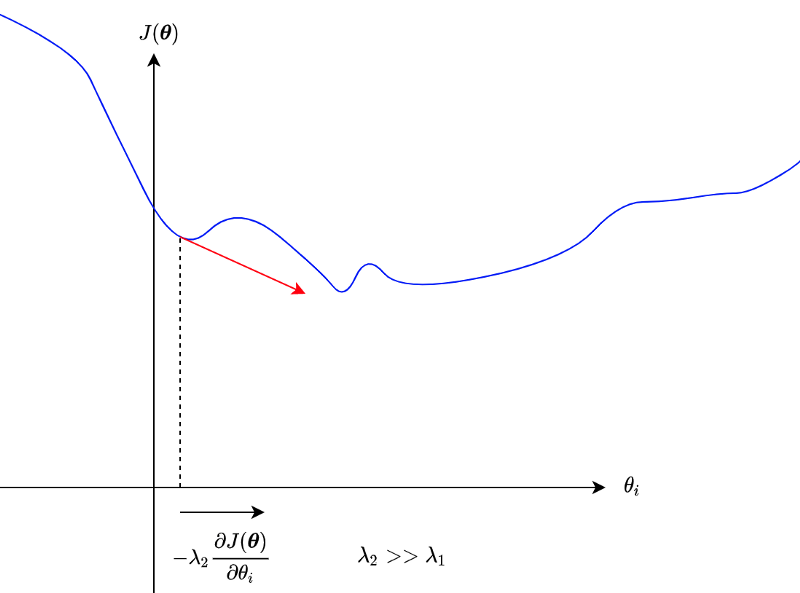

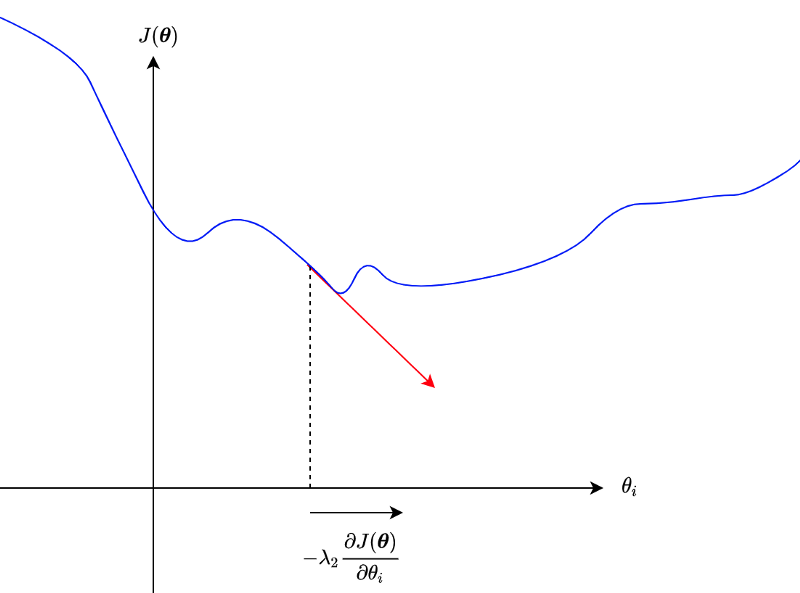

As mentioned, we don’t want to get stuck at a local minimum or a saddle point. But if partial derivatives are small, those parameters may not move much.

We could potentially use a large enough learning rate to go beyond hills.

However, we don’t want to skip the global minimum, either.

As we can see, controlling how much to update parameters is not a trivial task.

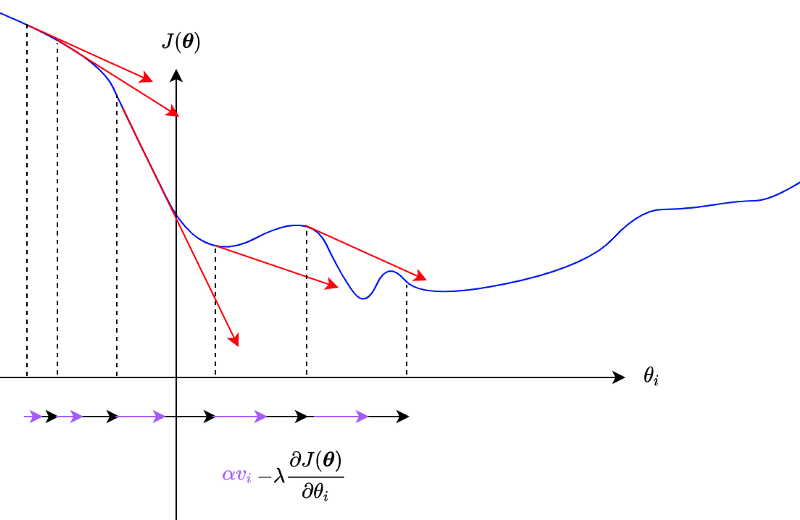

2.2 Momentum

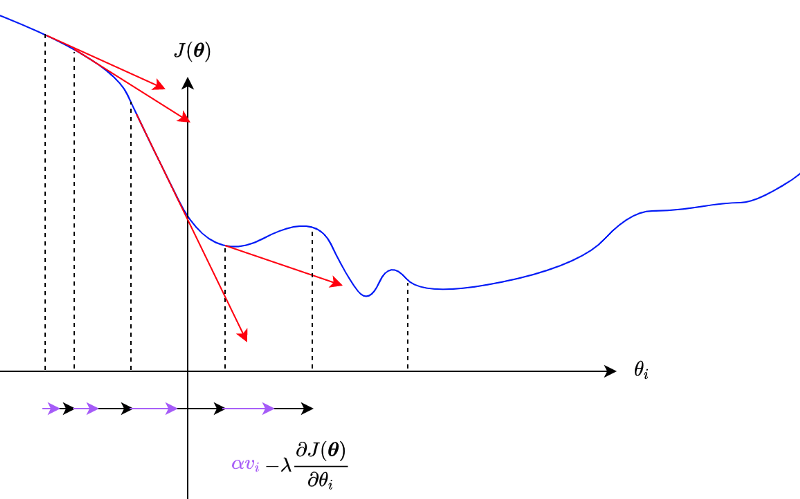

Using momentum is another way to escape from local minima and saddle points.

For each parameter,

A steep slope would add more and more momentum.

We can write it as below for all parameters:

When

The problem with the momentum is that it may overshoot the global minimum due to accumulated gradients.

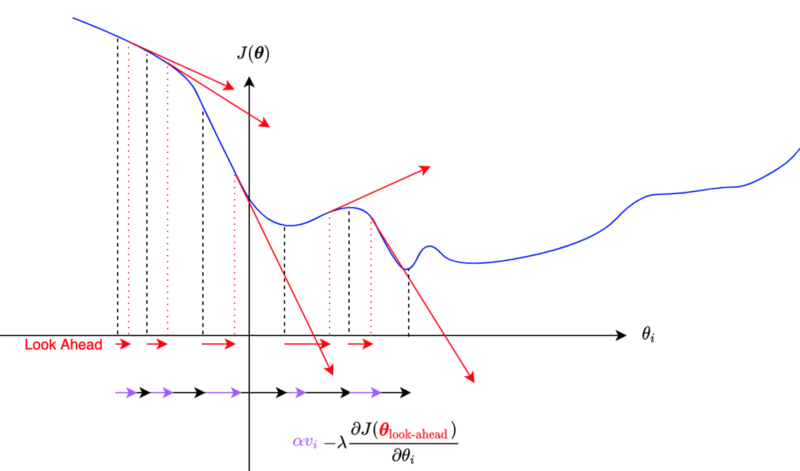

2.3 Nesterov Momentum

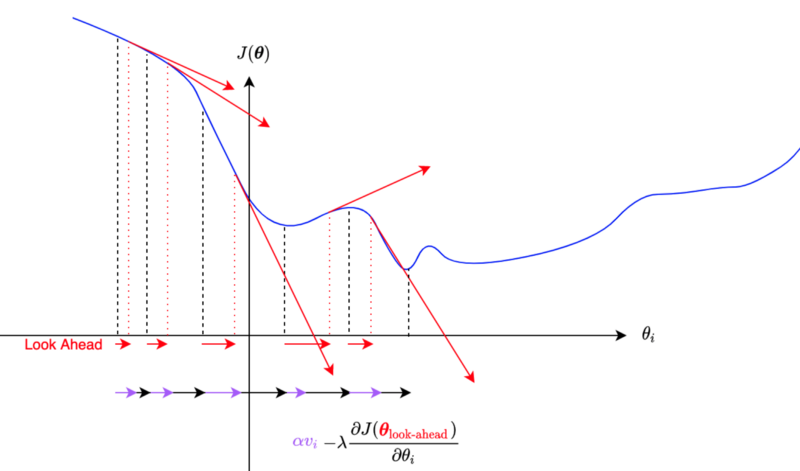

In Nesterov momentum, we calculate gradients at the parameters’ approximated future (look-ahead) position.

We update the momentum by using the partial derivative at an approximate future position of a parameter.

So, the momentum is updated with the gradient at a look-ahead position, incorporating future gradient values into the current parameter update.

If the gradients are getting smaller, the Nesterov momentum will react to that faster than the original momentum algorithm.

Other than using the look-ahead gradient, it is the same as SGD with the original momentum algorithm.

The difference between the original and the Nesterov momentum algorithms is more apparent if we write the loss calculation explicitly:

The original momentum update formula:

The Nesterov momentum update formula:

So, we are calculating the gradient using look-ahead parameters. Suppose the gradient is going to be smaller at the look-ahead position; the momentum will become less even before the parameter moves to the location, which reduces the overshooting problem.

It is like walking down a hill. You can walk fast when the slope continues to be steep, but when you look ahead and see the slope getting shallow, you can start slowing down.

Notes on Learning Rate Scheduler: as an aside, there is another way to control the learning rate using a learning rate scheduler. Deep learning frameworks like TensorFlow and PyTorch provide learning rate schedulers to adjust learning rates. You can gradually reduce (or even increase in some schedulers) the learning rate over the batches/epochs.

2.4 AdaGrad

SGD applies the same learning rate to all parameters. With momentum, parameters may update faster or slower individually.

However, if a parameter has a small partial derivative, it updates very slowly, and the momentum may not help much. Also, we don’t want a parameter with a substantial partial derivative to update too fast.

In 2011, John Duchi et al. published a paper on the AdaGrad (Adaptive Gradient) algorithm.

AdaGrad adjusts the learning rate for each parameter depending on the squared sum of past partial derivatives.

We can write the above for all parameters as follows:

The circle dot symbol means element-wise multiplication. In other words, we square each element of the gradient. The square root on the r is also an element-wise operation.

So, if a parameter has a steep partial derivative, the effective learning rate becomes smaller. For a shallow slope, the effective learning rate becomes relatively more significant.

The algorithm works effectively in some cases, but it has a problem in that it keeps accumulating the squared gradients from the beginning. Depending on where parameters are at initialization, it may be too aggressive to reduce the effective learning rate for some of the parameters.

Geoffrey Hinton solved AdaDelta’s problem with RMSprop.

2.5 RMSprop

In 2012, Geoffrey Hinton proposed RMSprop while teaching online in Coursera. He didn’t publish a paper on this. However, there is a presentation pdf, which we can see. RMSprop became well-known, and both PyTorch and TensorFlow support it.

RMSprop calculates an exponentially decaying average of squared gradients. It’s probably easier to understand by seeing the formula:

So, the effect of older gradients diminishes over the training cycles, which avoids the problem of AdaGrad’s diminishing effective learning rates.

The same thing can be written for all parameters as follows:

We can combine RMSprop with momentum.

There is another variation of AdaGrad, which solves the same problem. It is called AdaDelta.

2.6 AdaDelta

In 2021, Matthew D. Zeiler published a paper on AdaDelta. He developed AdaDelta independently from Hinton, but it has the same idea of using the exponentially decaying average of squared gradients — with a few more tweaks.

AdaDelta calculates a parameter-specific learning rate

The value of

One benefit of the algorithm is that AdaDelta adapts the learning rate for each parameter, and we do not specify a global learning rate as in other optimizers.

Using the adaptable learning rate for a parameter, we can express a parameter delta as follows:

And we update the parameter like the one below:

As for the exponentially decaying average of squared parameter deltas, we calculate like below:

It works like the momentum algorithm maintaining the learning rate to the recent level (provided v stays more or less the same) until the decay kicks in significantly.

We can write the above for all parameters as follows:

However, PyTorch supports a global learning rate for AdaDelta. So, the parameter update of AdaDelta in PyTorch works like the one below:

The default value of lr in PyTorch is 1.0, which works like the original AdaDelta algorithm. Additionally, we can control the global learning rate through a learning rate scheduler when it makes sense.

2.7 ADAM

In 2015, Durk Kingma et al. published a paper on ADAM (Adaptive Moment Estimation) algorithm.

Like RMSprop and AdaDelta, ADAM uses the exponentially decaying average of squared gradients.

I used the β symbol as the coefficient name instead of ρ used in the RMSprop section. The reason for that is ADAM also uses the exponentially decaying average of gradients.

So,

ADAM initializes the exponentially decaying averages

So, ADAM uses the following adjustment:

Since betas are close to 1, the denominators are close to zero, making

The effect of the beta adjustments will eventually diminish as the value of

All in all, the parameter update formula becomes as below:

So, ADAM works like a combination of RMSprop and momentum.

We can write the above for all parameters:

3 References

- Vanishing Gradient Problem

- Adaptive Subgradient Methods for Online Learning and Stochastic Optimization (2011)

John Duchi, Elad Hazan, Yoram Singer - Neural Networks for Machine Learning: Lecture 6a Overview of mini-batch gradient descent (2012)

Geoffrey Hinton, Nitish Srivastava, Kevin Swersky - ADADELTA: An Adaptive Learning Rate Method (2012)

Matthew D. Zeiler - Adam: A Method for Stochastic Optimization (2015)

Diederik P. Kingma, Jimmy Ba