KL Divergence Demystified

What does measuring the similarity of two probability distributions mean?

What does KL stand for? Is it a distance measure? What does it mean to measure the similarity of two probability distributions?

If you want to intuitively understand the KL divergence, you are in the right place. I’ll demystify it for you.

As I will explain from the information theory point of view, knowing the entropy and the cross-entropy concepts is required to apprehend this article thoroughly. If you are not familiar with them, you may want to read the following two articles: one for the entropy and the other for the cross-entropy.

If you are ready, read on.

1 What does KL stand for?

KL in the KL divergence stands for Kullback-Leibler, which represents the following two people:

They introduced the concept of the KL divergence in 1951 (Wikipedia).

2 What is the KL divergence?

The KL divergence tells us how well the probability distribution

As a reminder, I put the cross-entropy and the entropy formula below:

The KL divergence can also be expressed in the expectation form as follows:

The expectation formula can be expressed in the discrete summation form or the continuous integration form:

So, what does it measure? It measures the similarity (or dissimilarity) between two probability distributions.

If so, is it a distance measure?

To answer this question, let’s see a few more characteristics of the KL divergence.

3 The KL divergence is non-negative

The KL divergence is non-negative. An intuitive proof is that:

- If

- If

So, the KL divergence is a non-negative value that indicates how close two probability distributions are.

It does sound like a distance measure, doesn’t it? But it is not.

4 The KL divergence is asymmetric

The KL divergence is not symmetric:

We can deduce the above from the fact that the cross-entropy is asymmetric. The cross-entropy

So, it cannot be a distance measure, as any distance measure should be symmetric.

This asymmetric nature of the KL divergence is a crucial aspect. Let’s look at two examples to understand it intuitively.

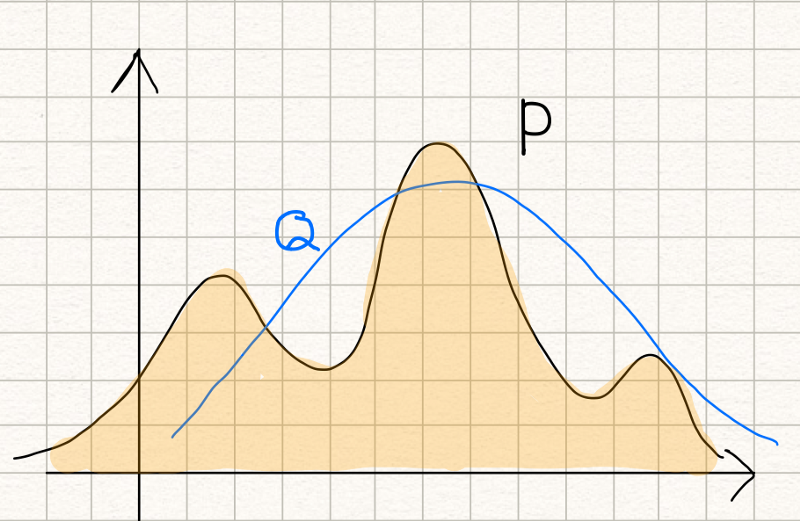

Suppose we have a probability distribution

Now, we want to approximate it with a normal distribution

The KL divergence measures inefficiency in using the probability distribution

If we swap P and Q, it means that we use the probability distribution P to approximate the normal distribution Q, and it’d look like the below:

Both cases measure the similarity between

5 Modeling a true distribution

By approximating a probability distribution with a well-known distribution like the normal distribution, binomial distribution, etc., we are modeling the true distribution with a known one.

This is when we are using the below formula:

Calculating the KL divergence, we can find the model (the distribution and the parameters) that fits the true distribution well.

6 Variational Auto-encoder

An example of using the below formula is the variational auto-encoder.

I will lightly touch on this topic here as it requires much more explanation for people unfamiliar with the variational auto-encoder.

The KL divergence is used to force the distribution of latent variables to be normal so that we can sample latent variables from the normal distribution. As such, it is included in the loss function to improve the similarity between the distribution of latent variables and the normal distribution.

I’ve written an article on VAE in details here.

7 A Few Mathy Points

The term

It is defined as infinity where

A more rigor proof of the KL divergence being non-negative is as follows:

Since

8 Likelihood Ratio

Another way to describe the KL divergence from a probabilistic perspective is to use the following likelihood ratio.

If you are interested in this approach, I recommend the article by Marko Cotra (the link in the references section below).

That is all for now. I hope this article is helpful to you.

9 References

- VAE: Variational Auto-Encoder (2013)

- Cross-Entropy Demystified

- Entropy Demystified

- Kullback Leibler divergence

Wikipedia - CS412 Fall 2008. Introduction to Data Warehousing and Data Mining

Jiawei Han - ECE 830 Fall 2011 Statistical Signal Processing

Robert Nowak - Making sense of the Kullback–Leibler (KL) Divergence

Marko Cotra - A Short Introduction to Entropy, Cross-Entropy, and KL-Divergence

Aurélien Géron