Matrix Decomposition Demystified

Eigen Decomposition, SVD, and Pseudo-inverse Matrix Made Easy

1 Matrix Decomposition

In this article, we discuss the following Matrix Decomposition topics:

- Square Matrix

- Eigenvalue and Eigenvector

- Symmetric Matrix

- Eigen Decomposition

- Orthogonal Matrix

- Singular Value Decomposition

- PSeudo-inverse Matrix

2 Square Matrix

Eigen Decomposition works only with square matrices.

As a quick reminder, let’s look at a square matrix. In square matrices, the number of rows and columns are the same.

For example,

That was easy. Let’s continue with Eigenvalue and Eigenvector concepts.

3 Eigenvalue and Eigenvector

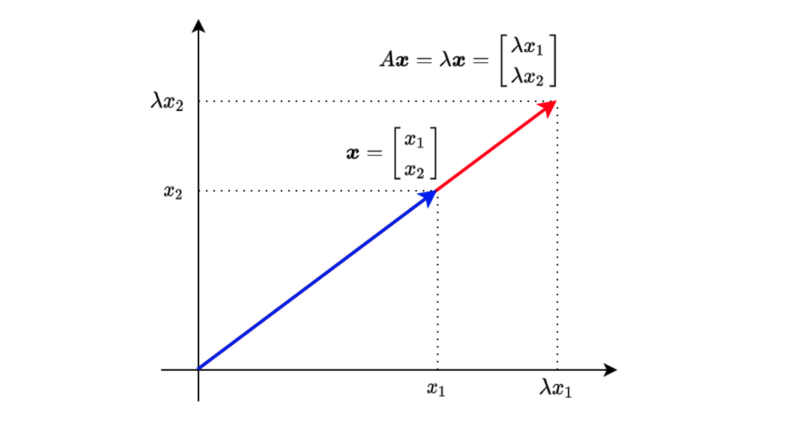

When a square matrix A and a vector

, we call λ eigenvalue and the vector x eigenvector. λ is a scalar.

The above means that multiplying vector

Let’s move everything to the left side of the equation to find the condition for having eigenvalues and eigenvectors.

For vector

Let’s use the 2x2 square matrix as an example to calculate the determinant:

So,

And we calculate the determinant (and we want it to be zero):

Therefore,

Let’s define the eigenvector as follows:

As a reminder, we have the following equation for eigenvalue and eigenvector:

We can calculate the eigenvector

To satisfy the above conditions,

Although t can be any value, we often make the L2 norm to be a size of 1 since, otherwise, we have an infinite number of possible solutions:

The eigenvector for the eigenvalue

or

They are the same, except that one vector direction is the opposite. So, I’ll choose the first one as the eigenvector for

Let’s make sure this works as intended:

We can solve for

For 3×3 or larger matrices, it becomes too tedious to calculate all that manually. We can write a Python script with NumPy to do the same:

import numpy as np

A = np.array([

[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

print('A=')

print(A)

print()

values, vectors = np.linalg.eig(A)

print('eigenvalues')

print(values)

print()

print('eigenvectors')

print(vectors)Below is the output:

A=

[[1 2 3]

[4 5 6]

[7 8 9]]

eigenvalues

[ 1.61168440e+01 -1.11684397e+00 -1.30367773e-15]

eigenvectors

[[-0.23197069 -0.78583024 0.40824829]

[-0.52532209 -0.08675134 -0.81649658]

[-0.8186735 0.61232756 0.40824829]]We can confirm they work as expected:

λ = values[0]

x = vectors[:, 0]

print('λ * x')

print(λ * x)

print()

print('A @ x')

print(A @ x)Below is the output:

λ * x

[ -3.73863537 -8.46653421 -13.19443305]

A @ x

[ -3.73863537 -8.46653421 -13.19443305]This works fine but if you want to keep the vectors in column format, you can do the following:

λ = values[0]

x = vectors[:, 0:1]

print('λ * x')

print(λ * x)

print()

print('A @ x')

print(A @ x)Below is the output:

λ * x

[[ -3.73863537]

[ -8.46653421]

[-13.19443305]]

A @ x

[[ -3.73863537]

[ -8.46653421]

[-13.19443305]]4 Symmetric Matrix

Eigenvectors of a symmetric matrix are orthogonal to each other. This fact will become helpful later on. So, let’s prove it here.

Suppose

Therefore,

However,

Therefore, eigenvectors of a symmetric matrix are orthogonal to each other.

Now we are ready to discuss how Eigen Decomposition works.

5 Eigen Decomposition

Using eigenvalues and eigenvectors, we can decompose a square matrix A as follows:

Q is a matrix that has eigenvectors in the columns.

We put eigenvalues in descending order to make the diagonal matrix

To prove that such Eigen Decomposition is possible, we adjust the equation slightly:

And we will show that

In other words, AQ is equivalent to each eigenvector inside matrix Q multiplied by the corresponding eigenvalue.

So, we have proved that Eigen Decomposition is possible.

Let’s try Eigen Decomposition with Numpy:

import numpy as np

A = np.array([

[1, 2, 3],

[4, 5, 6],

[7, 8, 9]], dtype=np.float64)

print('A=')

print(A)

print()

# Eigenvalue and Eigenvector

values, vectors = np.linalg.eig(A)

Λ = np.diag(values)

Q = vectors

QΛQinv = Q @ Λ @ np.linalg.inv(Q)

print('QΛQinv=')

print(QΛQinv)Below is the output:

A=

[[1. 2. 3.]

[4. 5. 6.]

[7. 8. 9.]]

QΛQinv=

[[1. 2. 3.]

[4. 5. 6.]

[7. 8. 9.]]6 Orthogonal Matrix

As mentioned before, when matrix A is symmetric, eigenvectors in matrix Q are orthogonal to each other.

When we also make the L2 norm of all eigenvectors 1 (orthonormal), we call Q an orthogonal matrix.

When Q is an orthogonal matrix,

As such,

Therefore,

The above fact will be helpful when we discuss the singular value decomposition.

Let’s do QΛQ^T with Numpy:

import numpy as np

# Asymmetric Matrix

A = np.array([

[1, 2, 3],

[2, 5, 6],

[3, 6, 9]], dtype=np.float64)

print('A=')

print(A)

print()

# Eigenvalue and Eigenvector

values, vectors = np.linalg.eig(A)

Λ = np.diag(values)

Q = vectors

QΛQT = Q @ Λ @ Q.T

print('QΛQT=')

print(QΛQT)Below is the output:

A=

[[1. 2. 3.]

[2. 5. 6.]

[3. 6. 9.]]

QΛQT=

[[1. 2. 3.]

[2. 5. 6.]

[3. 6. 9.]]It’s fun to see the theory works precisely as expected.

Also, let’s check Q is an orthogonal matrix:

QQT = Q @ Q.T

print('QQT=')

print(QQT)Below is the output:

QQT=

[[ 1.00000000e+00 5.55111512e-17 -1.80411242e-16]

[ 5.55111512e-17 1.00000000e+00 0.00000000e+00]

[-1.80411242e-16 0.00000000e+00 1.00000000e+00]]Here we see the limitation of numerical computing. Non-diagonal elements should be 0; instead, some are very small values.

So far, we are dealing with square matrices as Eigen Decomposition works only with square matrices. Next, we’ll see SVD that works with non-square matrices.

7 Singular Value Decomposition

SVD exists for any matrix, even for non-square matrices.

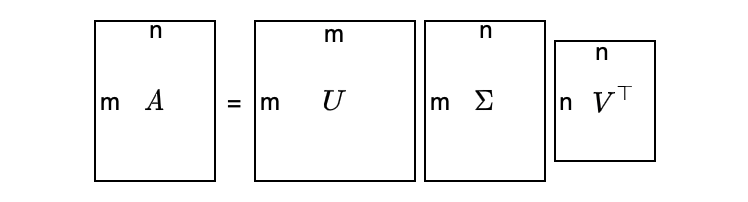

Suppose matrix A is m×n (m ≠ n), we can still decompose matrix A as follows:

It looks too abstract — a visualization may help:

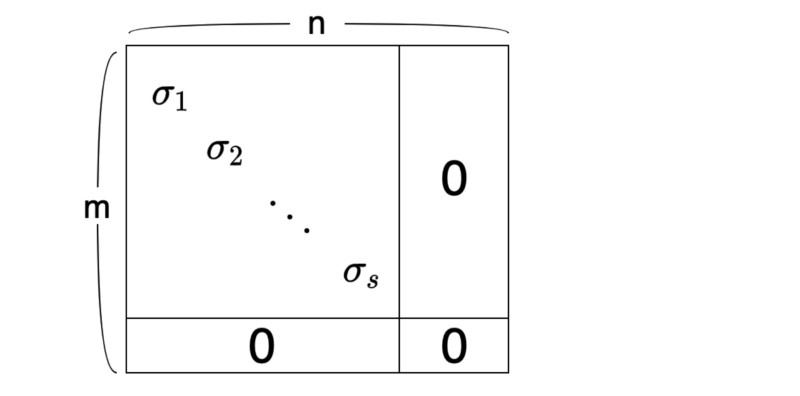

As a convention, we sort the singular values in descending order:

The number of singular values is the same as the rank of matrix A, which is the number of independent column vectors.

So, how can we create such a decomposition?

The main idea is that we make a square matrix like the one below:

or

We can choose either, but using the one with fewer elements is easier.

For example, if matrix A is 100×10,000.

Suppose we perform SVD on matrix A using

where

is a diagonal matrix (m×m) with squared sigmas as the top-left diagonal elements. These values are eigenvalues of AA^T since we have eigen-decomposed

The column vectors in matrix U are eigenvectors because:

In other words, if we can eigen-decompose

We can consider the same calculation with

where

is a diagonal matrix (n×n) with squared sigmas as the top-left diagonal elements. These values are eigenvalues since we eigen-decompose

The column vectors in matrix V are eigenvectors because:

In other words, if we can eigen-decompose A^TA, we can calculate V and diagonal sigmas, and then we can calculate U.

So, if we eigen-decompose either

Again, we should choose a smaller one of

Let’s try SVD with Numpy:

import numpy as np

A = np.array([

[1, 2],

[3, 4],

[5, 6]], dtype=np.float64)

print('A=')

print(A)

print()

U, S, VT = np.linalg.svd(A)

print('U=')

print(U)

print()

print('S=')

print(S)

print()

print('VT=')

print(VT)

print()Below is the output:

A=

[[1. 2.]

[3. 4.]

[5. 6.]]

U=

[[-0.2298477 0.88346102 0.40824829]

[-0.52474482 0.24078249 -0.81649658]

[-0.81964194 -0.40189603 0.40824829]]

S=

[9.52551809 0.51430058]

VT=

[[-0.61962948 -0.78489445]

[-0.78489445 0.61962948]]Let’s set up matrix Σ as follows:

Σ = np.zeros_like(A, dtype=np.float64)

Σ[:2] = np.diag(S)

print('Σ=')

print(Σ)

print()Below is the output:

Σ=

[[9.52551809 0. ]

[0. 0.51430058]

[0. 0. ]]Now, we can confirm

print('UΣVT=')

print(U @ Σ @ VT)

print()Below is the output:

UΣVT=

[[1. 2.]

[3. 4.]

[5. 6.]]Now that we know how SVD works, we are ready to work on pseudo-inverse matrices.

8 Pseudo-inverse Matrix

An inverse matrix does not always exist, even for a square matrix. However, a pseudo-inverse — also called Moore Penrose inverse — matrix exists for non-square matrices.

For example, matrix A is m×n.

Using a pseudo-inverse matrix

We define a pseudo-inverse matrix

V and U are from SVD:

We make

Then

Now, we can see how

In the same way,

In summary, if we can perform SVD on matrix A, we can calculate A^+ by VD^+UT, which is a pseudo-inverse matrix of A.

Let’s try Pseudo-inverse with Numpy:

import numpy as np

A = np.array([

[1, 2],

[3, 4],

[5, 6]], dtype=np.float64)

AP = np.linalg.pinv(A)

print(‘AP @ A’)

print(AP @ A)The below is the output:

AP @ A

[[ 1.0000000000000004e+00 0.0000000000000000e+00]

[-4.4408920985006262e-16 9.9999999999999956e-01]]Congratulations! You’ve made it this far. I hope you’ve enjoyed it!

9 References

- Eigendecomposition of a matrix

Wikipedia - Singular Value Decomposition

Wikipedia - Moore Penrose inverse

Wikipedia - Up-sampling with Transposed Convolution